Building Real-Time Recommendations with Kafka, S3, Rockset and Retool

October 21, 2022

Real-time customer 360 applications are essential in allowing departments within a company to have reliable and consistent data on how a customer has engaged with the product and services. Ideally, when someone from a department has engaged with a customer, you want up-to-date information so the customer doesn’t get frustrated and repeat the same information multiple times to different people. Also, as a company, you can start anticipating the customers’ needs. It’s part of building a stellar customer experience, where customers want to keep coming back, and you start building customer champions. Customer experience is part of the journey of building loyal customers. To start this journey, you need to capture how customers have interacted with the platform: what they’ve clicked on, what they’ve added to their cart, what they’ve removed, and so on.

When building a real-time customer 360 app, you’ll definitely need event data from a streaming data source, like Kafka. You’ll also need a transactional database to store customers’ transactions and personal information. Finally, you may want to combine some historical data from customers’ prior interactions as well. From here, you’ll want to analyze the event, transactional, and historical data in order to understand their trends, build personalized recommendations, and begin anticipating their needs at a much more granular level.

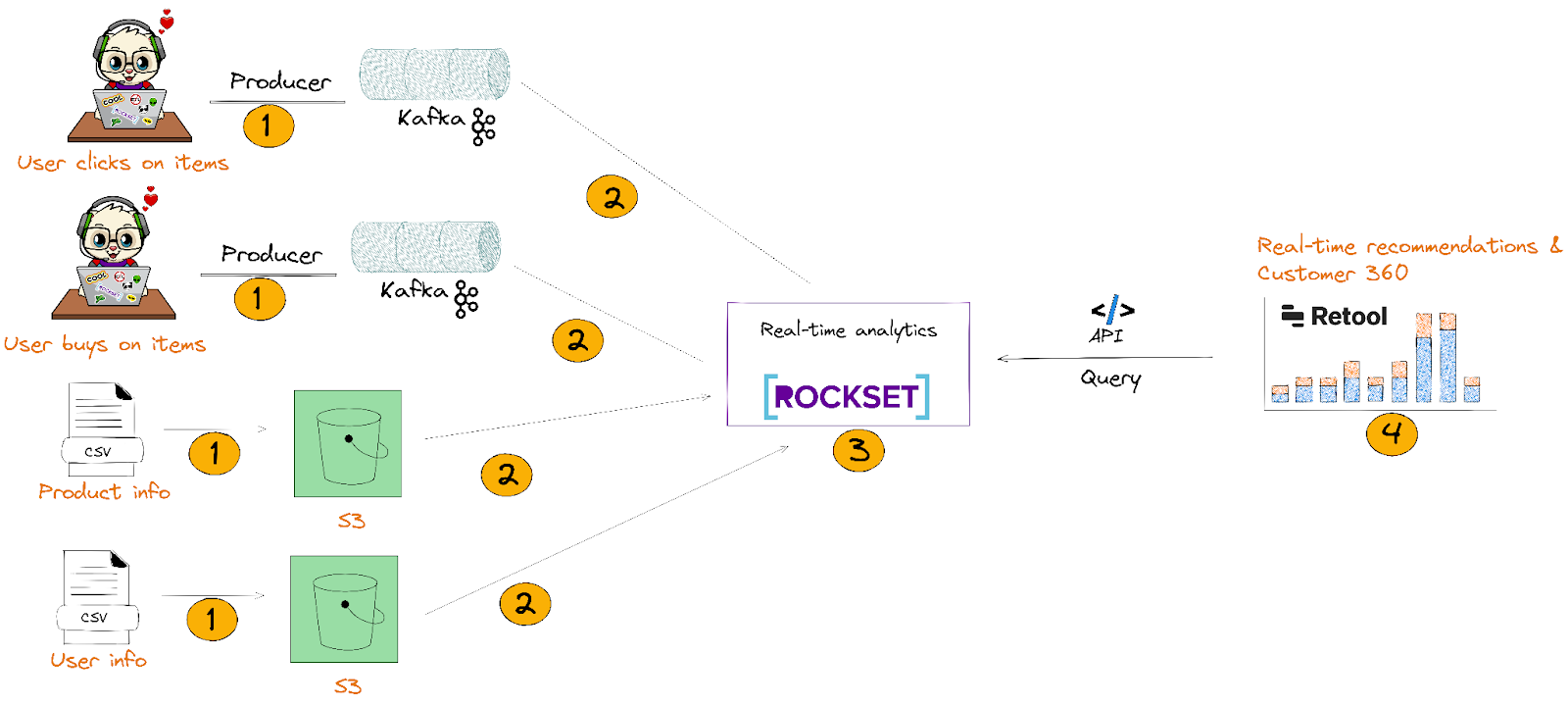

We’ll be building a basic version of this using Kafka, S3, Rockset, and Retool. The idea here is to show you how to integrate real-time data with data that’s static/historical to build a comprehensive real-time customer 360 app that gets updated within seconds:

- We’ll send clickstream and CSV data to Kafka and AWS S3 respectively.

- We’ll integrate with Kafka and S3 through Rockset’s data connectors. This allows Rockset to automatically ingest and index JSON i.e.nested semi-structured data without flattening it.

- In the Rockset Query Editor, we’ll write complex SQL queries that JOIN, aggregate, and search data from Kafka and S3 to build real-time recommendations and customer 360 profiles. From there, we’ll create data APIs that’ll be used in Retool (step 4).

- Finally, we’ll build a real-time customer 360 app with the internal tools on Retool that’ll execute Rockset’s Query Lambdas. We’ll see the customer’s 360 profile that’ll include their product recommendations.

Key requirements for building a real-time customer 360 app with recommendations

Streaming data source to capture customer’s activities: We’ll need a streaming data source to capture what grocery items customers are clicking on, adding to their cart, and much more. We’re working with Kafka because it has a high fanout and it’s easy to work with many ecosystems.

Real-time database that handles bursty data streams: You need a database that separates ingest compute, query compute, and storage. By separating these services, you can scale the writes independently from the reads. Typically, if you couple compute and storage, high write rates can slow the reads, and decrease query performance. Rockset is one of the few databases that separate ingest and query compute, and storage.

Real-time database that handles out-of-order events: You need a mutable database to update, insert, or delete records. Again, Rockset is one of the few real-time analytics databases that avoids expensive merge operations.

Internal tools for operational analytics: I chose Retool because it’s easy to integrate and use APIs as a resource to display the query results. Retool also has an automatic refresh, where you can continually refresh the internal tools every second.

Let’s build our app using Kafka, S3, Rockset, and Retool

So, about the data

Event data to be sent to Kafka In our example, we’re building a recommendation of what grocery items our user can consider buying. We created 2 separate event data in Mockaroo that we’ll send to Kafka:

-

user_activity_v1

- This is where users add, remove, or view grocery items in their cart.

-

user_purchases_v1

- These are purchases made by the customer. Each purchase has the amount, a list of items they bought, and the type of card they used.

You can read more about how we created the data set in the workshop.

S3 data set

We have 2 public buckets:

-

1 bucket contains grocery information

- The bucket contains the product id, product name, description, and some other minor information.

-

1 bucket contains the users' information

- The bucket contains the user’s id, name, address, and other minor information.

Send event data to Kafka

The easiest way to get set up is to create a Confluent Cloud cluster with 2 Kafka topics:

- user_activity

- user_purchases

Alternatively, you can find instructions on how to set up the cluster in the Confluent-Rockset workshop.

You’ll want to send data to the Kafka stream by modifying this script on the Confluent repo. In my workshop, I used Mockaroo data and sent that to Kafka. You can follow the workshop link to get started with Mockaroo and Kafka!

S3 public bucket availability

The 2 public buckets are already available. When we get to the Rockset portion, you can plug in the S3 URI to populate the collection. No action is needed on your end.

Getting started with Rockset

You can follow the instructions on creating an account.

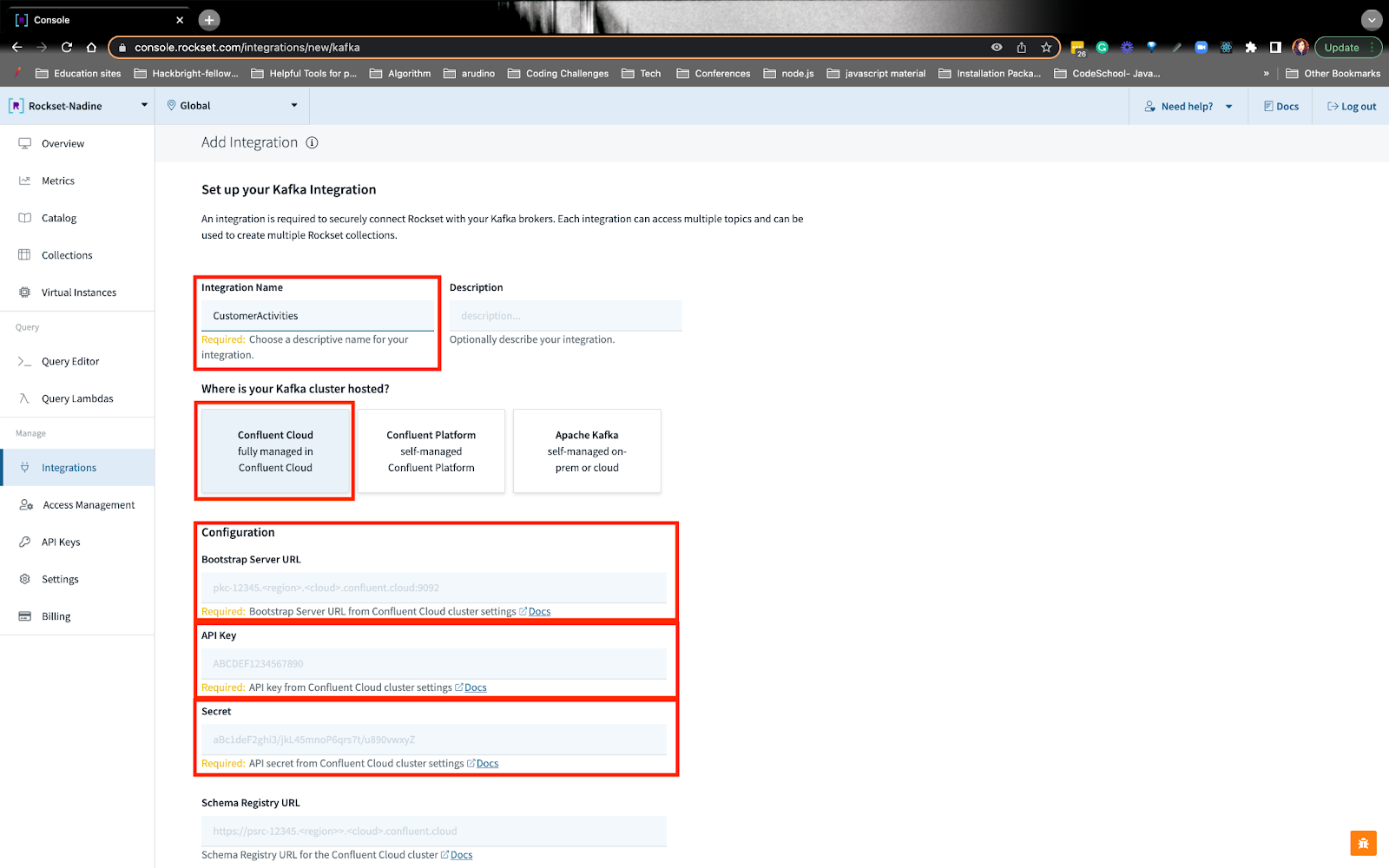

Create a Confluent Cloud integration on Rockset

In order for Rockset to read the data from Kafka, you have to give it read permissions. You can follow the instructions on creating an integration to the Confluent Cloud cluster. All you’ll need to do is plug in the bootstrap-url and API keys:

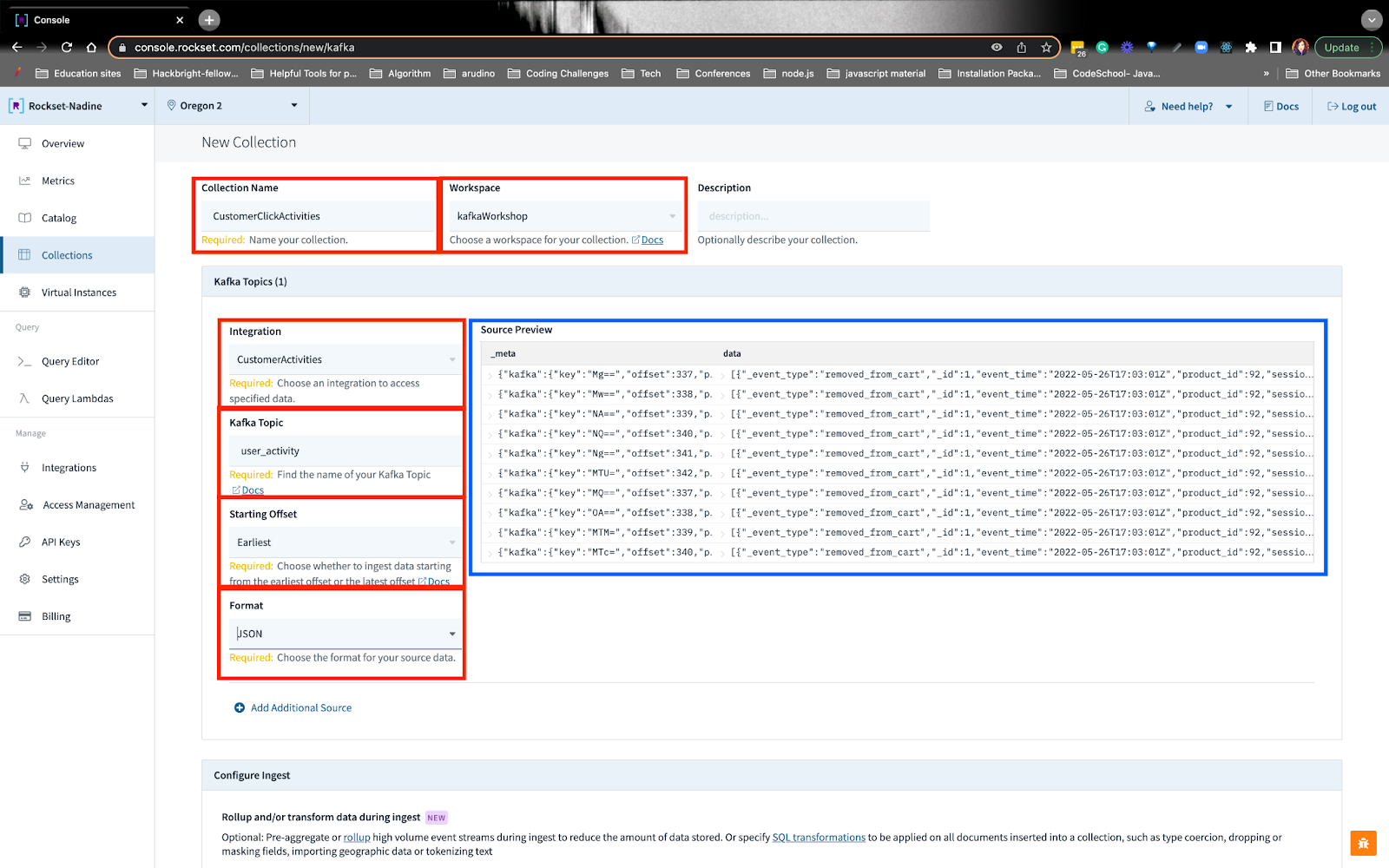

Create Rockset collections with transformed Kafka and S3 data

For the Kafka data source, you’ll put in the integration name we created earlier, topic name, offset, and format. When you do this, you’ll see the preview.

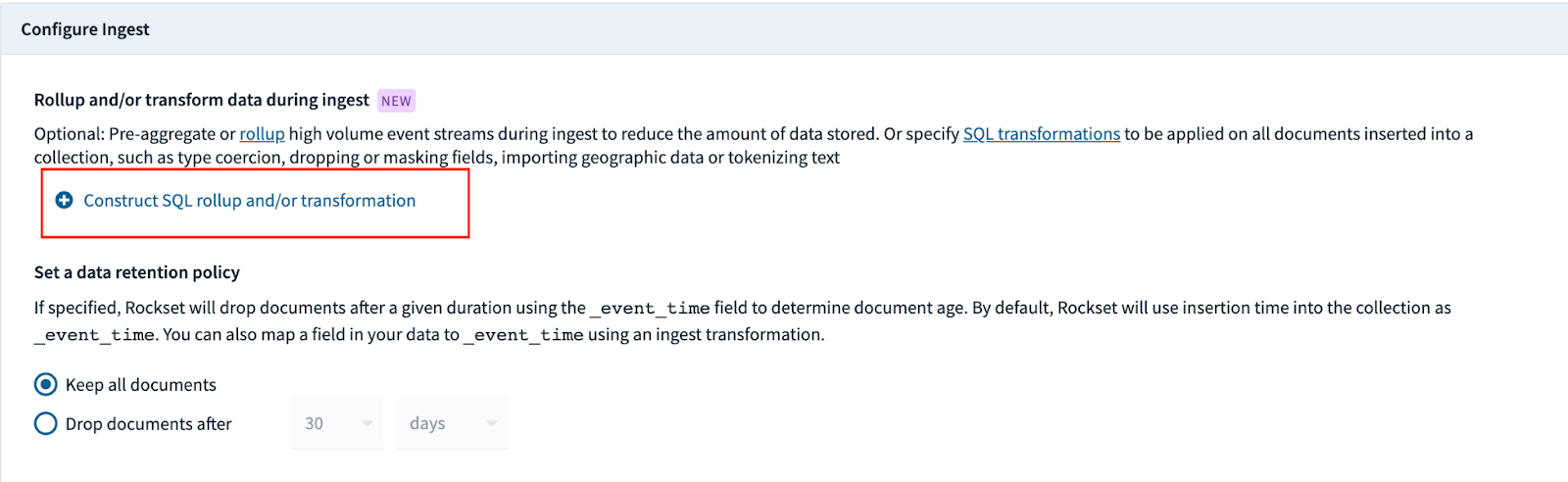

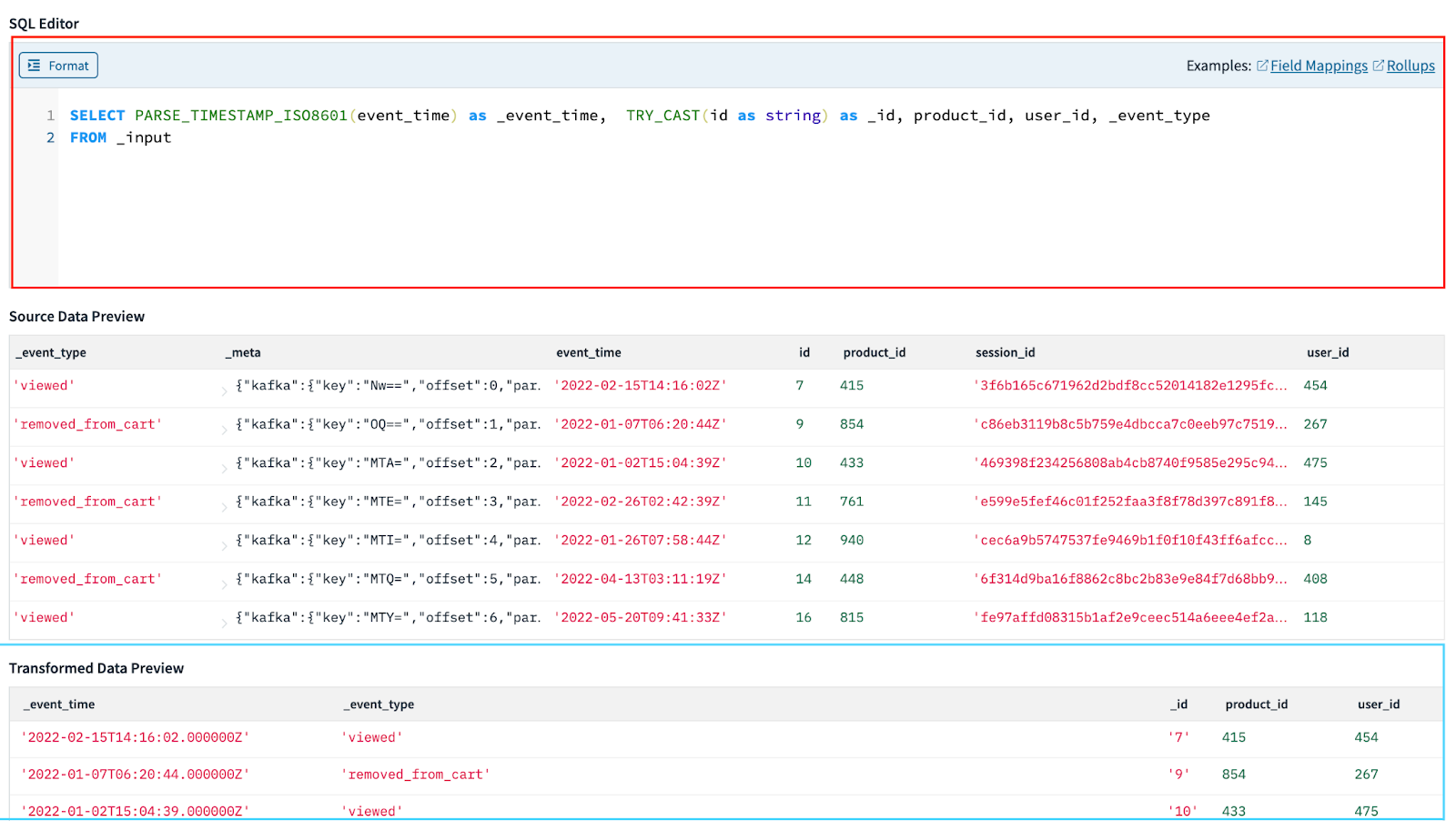

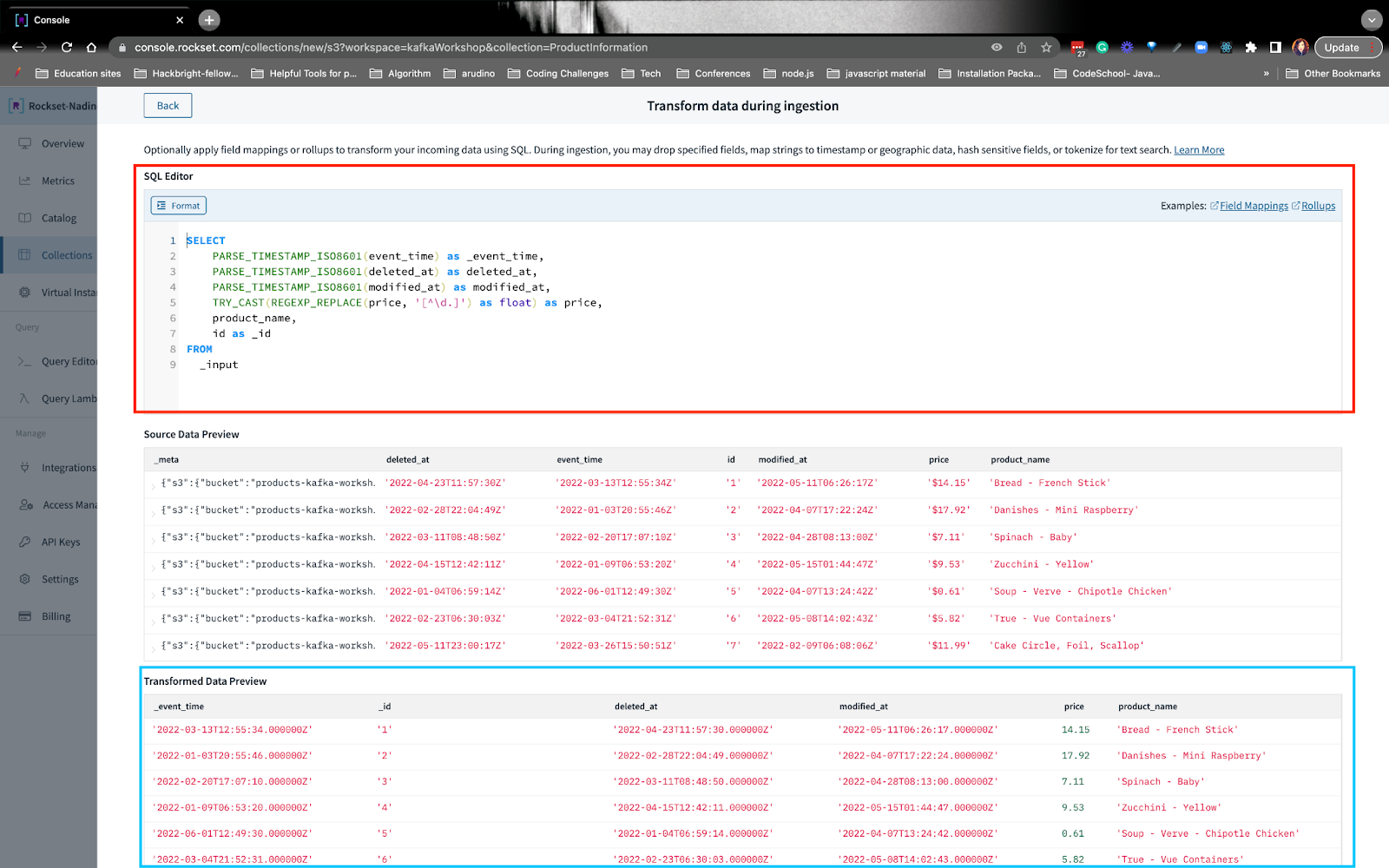

Towards the bottom of the collection, there’s a section where you can transform data as it is being ingested into Rockset:

From here, you can write SQL statements to transform the data:

In this example, I want to point out that we are remapping eventtime to \eventtime. Rockset associates a timestamp with each document in a field named \eventtime. If an \event_time is not provided when you insert a doc, Rockset provides it as the time the data was ingested because queries on this field are significantly faster than similar queries on regularly-indexed fields.

When you’re done writing the SQL transformation query, you can apply the transformation and create the collection.

We’re going to also be transforming the Kafka topic user_purchases, in a similar fashion I just explained here. You can follow for more details on how we transformed and created the collection from these Kafka topics.

S3

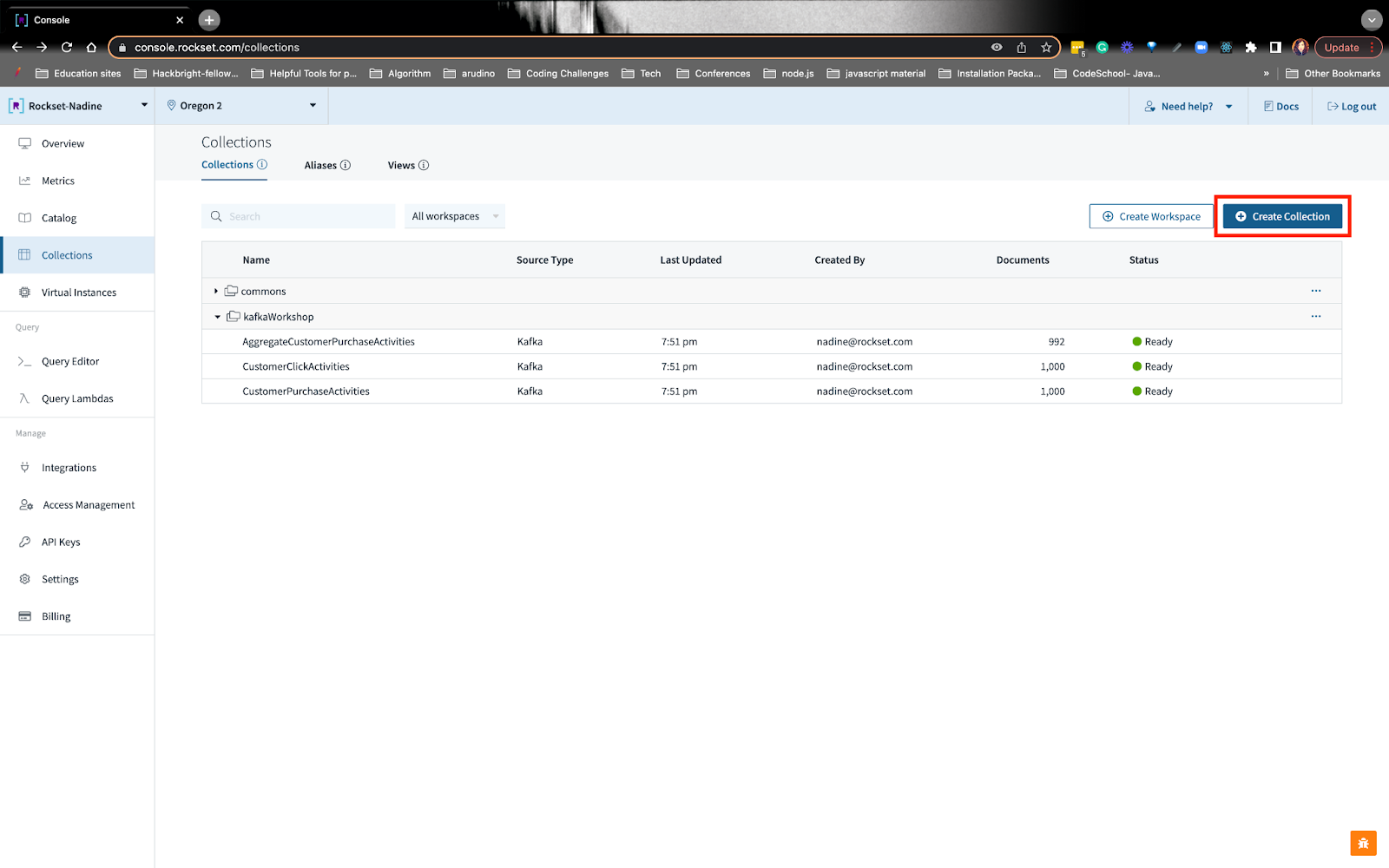

To get started with the public S3 bucket, you can navigate to the collections tab and create a collection:

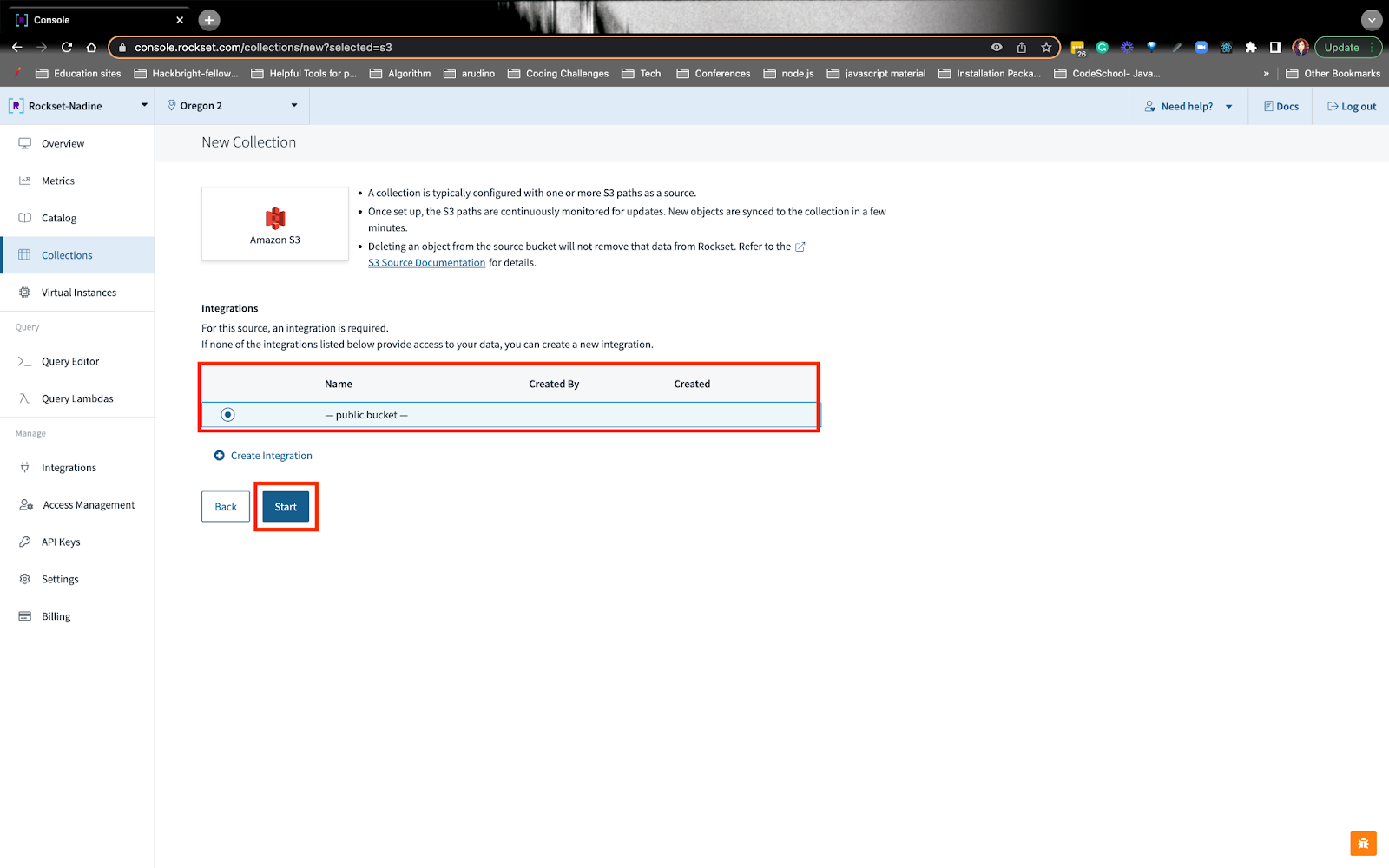

You can choose the S3 option and pick the public S3 bucket:

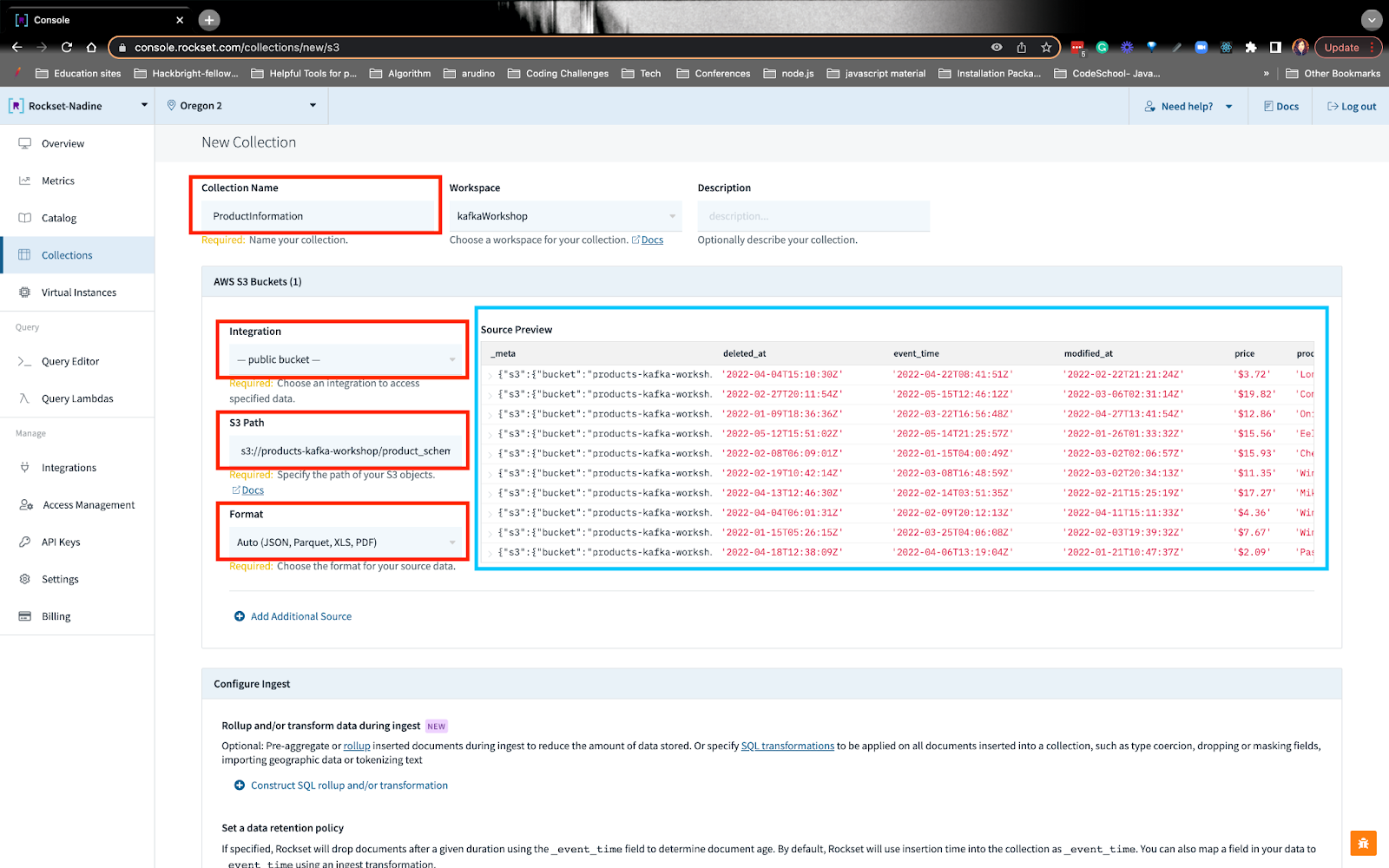

From here, you can fill in the details, including the S3 path URI and see the source preview:

Similar to before, we can create SQL transformations on the S3 data:

You can follow how we wrote the SQL transformations.

Build a real-time recommendation query on Rockset

Once you’ve created all the collections, we’re ready to write our recommendation query! In the query, we want to build a recommendation of items based on the activities since their last purchase. We’re building the recommendation by gathering other items users have purchased along with the item the user was interested in since their last purchase.

You can follow exactly how we build this query. I’ll summarize the steps below.

Step 1: Find the user’s last purchase date

We’ll need to order their purchase activities in descending order and grab the latest date. You’ll notice on line 8 we are using a parameter :userid. When we make a request, we can write the userid we want in the request body.

Step 2: Grab the customer’s latest activities since their last purchase

Here, we’re writing a CTE, common table expression, where we can find the activities since their last purchase. You’ll notice on line 24 we are only interested in the activity _eventtime that’s greater than the purchase \event_time.

Step 3: Find previous purchases that contain the customer's items

We’ll want to find all the purchases that other people have bought, that contain the customer’s items. From here we can see what items our customer will likely buy. The key thing I want to point out is on line 44: we use ARRAY_CONTAINS() to find the item of interest and see what other purchases have this item.

Step 4: Aggregate all the purchases by unnesting an array

We’ll want to see the items that have been purchased along with the customer’s item of interest. In step 3, we got an array of all the purchases, but we can’t aggregate the product IDs just yet. We need to flatten the array and then aggregate the product IDs to see which product the customer will be interested in. On line 52 we UNNEST() the array and on line 49 we COUNT(*) on how many times the product ID reoccurs. The top product IDs with the most count, excluding the product of interest, are the items we can recommend to the customer.

Step 5: Filter results so it doesn't contain the product of interest

On line 63-69 we filter out the customer’s product of interest by using NOT IN().

Step 6: Identify the product ID with the product name

Product IDs can only go so far- we need to know the product names so the customer can search through the e-commerce site and potentially add it to their cart. On line 77 we use join the S3 public bucket that contains the product information with the Kafka data that contains the purchase information via the product IDs.

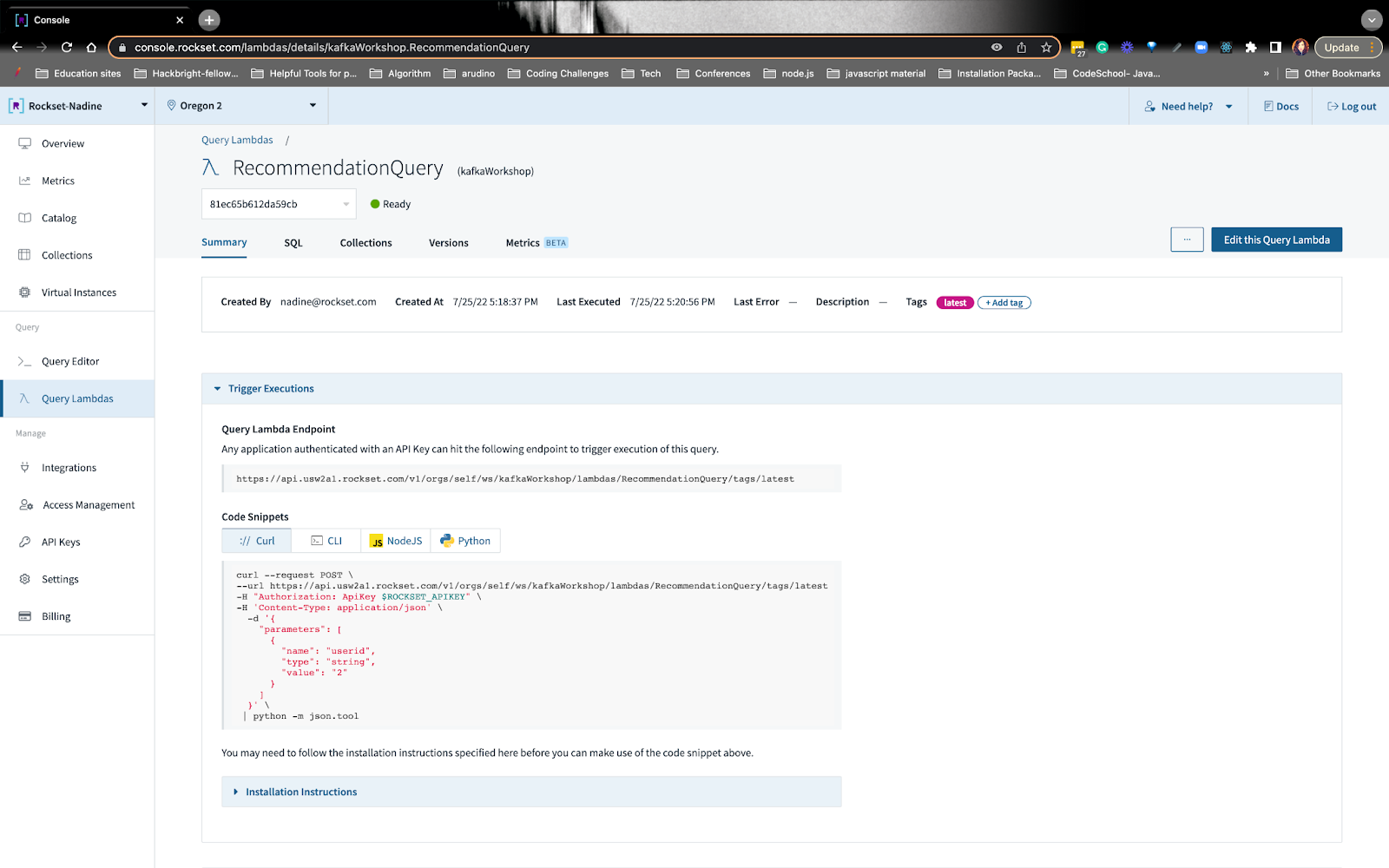

Step 7: Create a Query Lambda

On the Query Editor, you can turn the recommendation query into an API endpoint. Rockset automatically generates the API point, and it’ll look like this:

We’re going to use this endpoint on Retool.

That wraps up the recommendation query! We wrote some other queries that you can explore on the workshop page, like getting the user’s average purchase price and total spend!

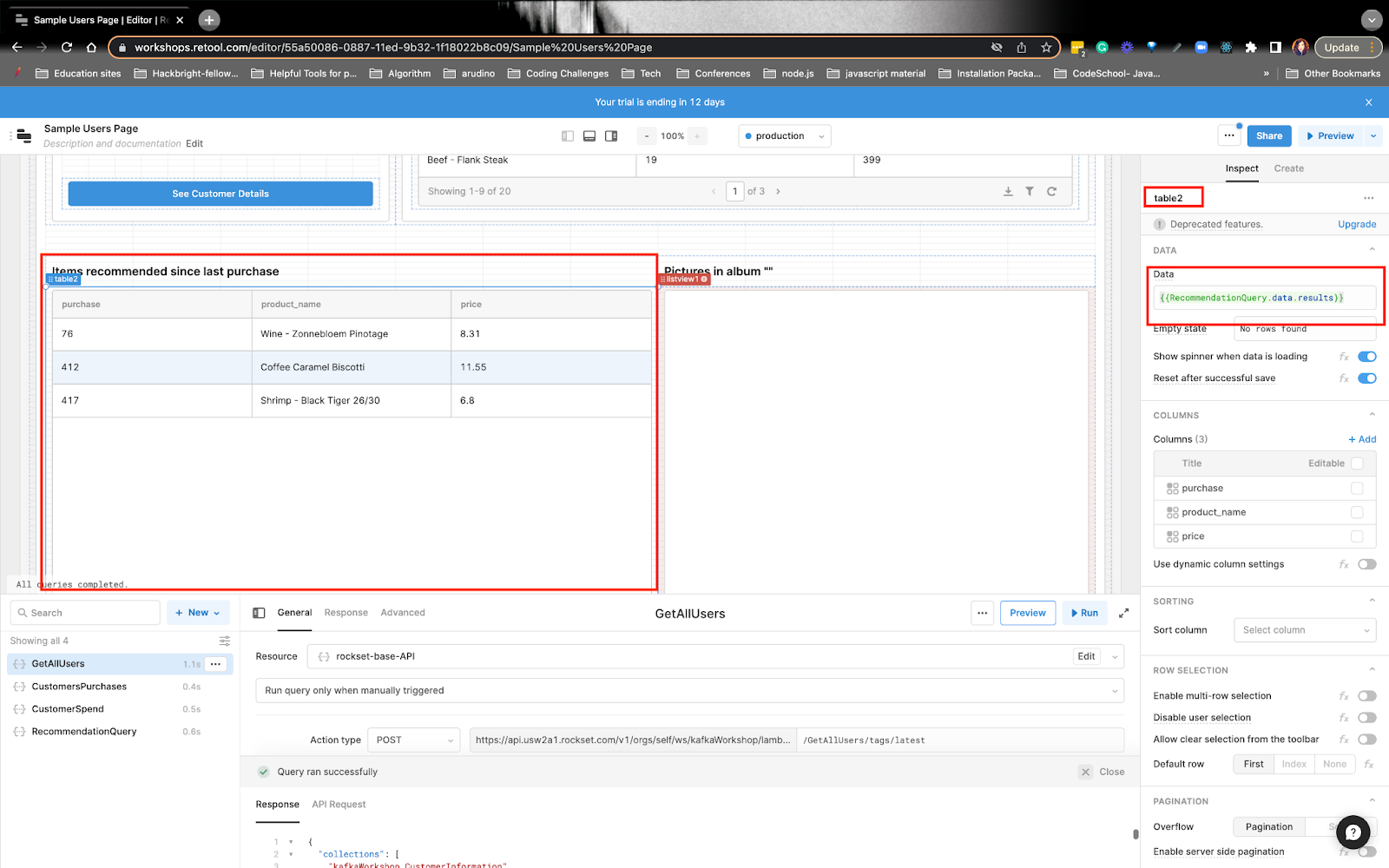

Finish building the app in Retool with data from Rockset

Retool is great for building internal tools. Here, customer service agents or other team members can easily access the data and assist customers. The data that’ll be displayed on Retool will be coming from the Rockset queries we wrote. Anytime Retool sends a request to Rockset, Rockset returns the results, and Retool displays the data.

You can get the full scoop on how we will build on Retool.

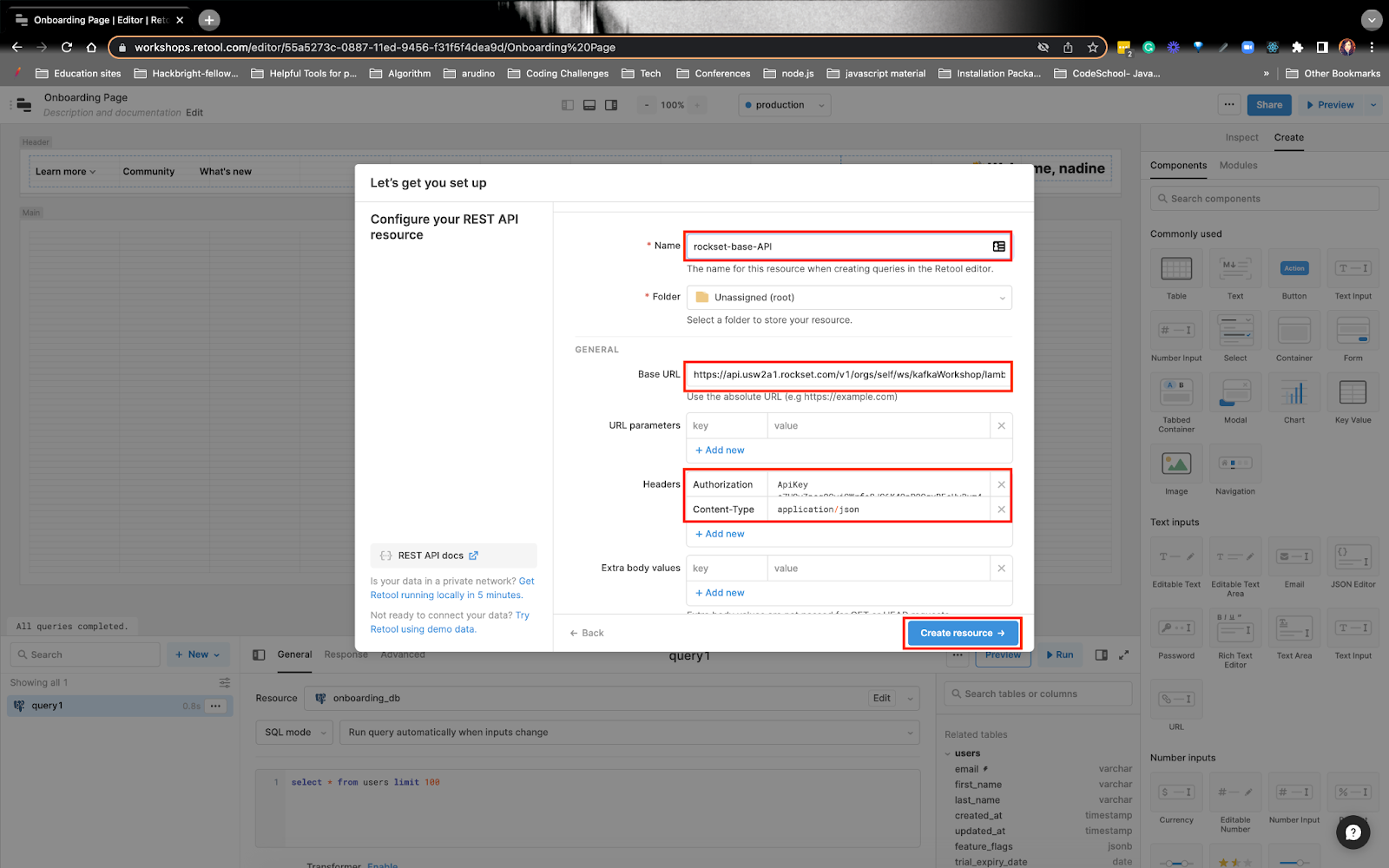

Once you create your account, you’ll want to set up the resource endpoint. You’ll want to choose the API option and set up the resource:

You’ll want to give the resource a name, here I named it rockset-base-API.

You’ll see under the Base URL, I put the Query Lambda endpoint up to the lambda portion - I didn’t put the whole endpoint. Example:

- https://api.usw2a1.rockset.com/v1/orgs/self/ws/kafkaWorkshop/lambdas

- I’ll add the rest of the endpoint later on

Under Headers, I put the Authorization and Content-Type values.

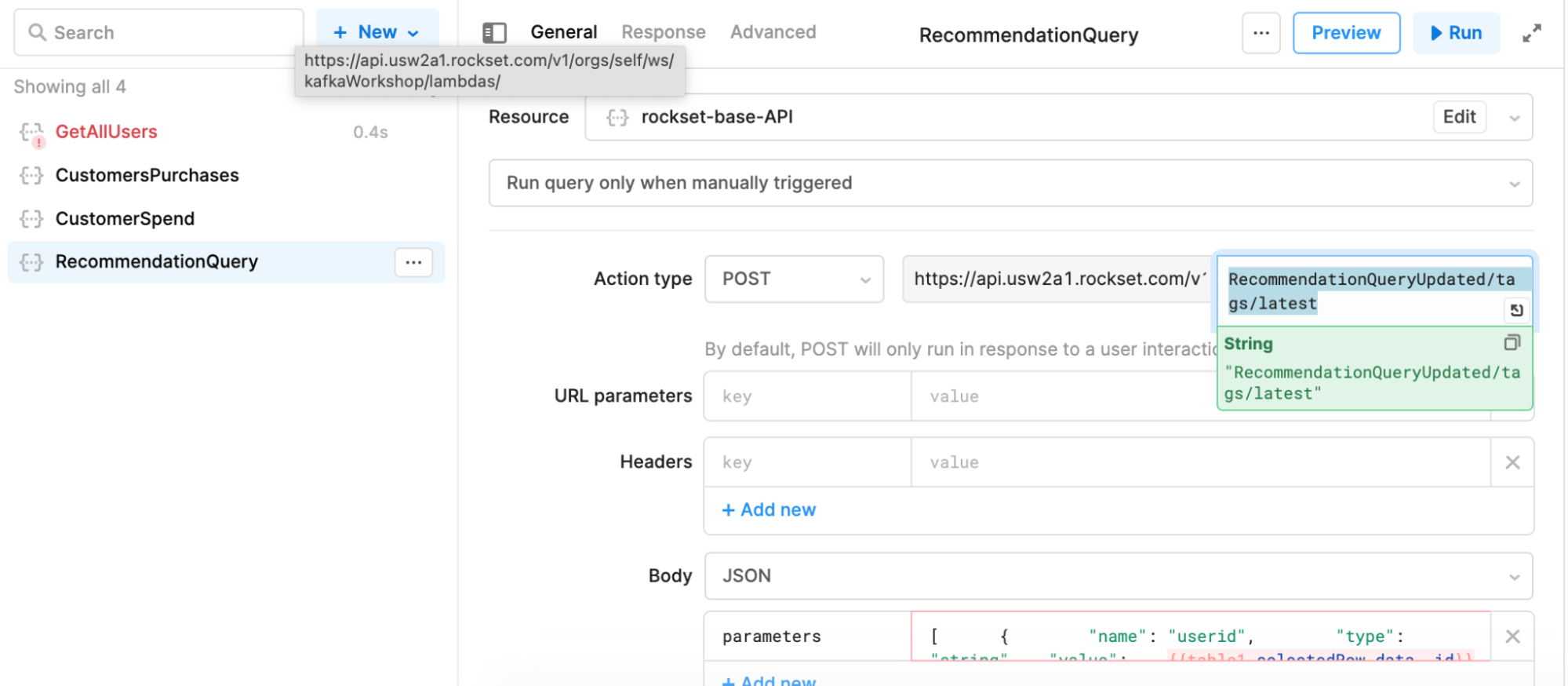

Now, you’ll need to create the resource query. You’ll want to choose the rockset-base-API as the resource and on the second half of the resource, you’ll put everything else that comes after lambdas portion. Example:

- RecommendationQueryUpdated/tags/latest

Under the parameters section, you’ll want to dynamically update the userid.

After you create the resource, you’ll want to add a table UI component and update it to reflect the user’s recommendation:

You can follow how we built the real-time customer app on Retool.

This wraps up how we built a real-time customer 360 app with Kafka, S3, Rockset, and Retool. If you have any questions or comments, definitely reach out to the Rockset Community.