5 Tasks You Can Automate in Rockset Using Scheduled Query Lambdas

August 28, 2023

Why and what to automate

As application developers and architects, whenever we see repeating tasks, we immediately think about how to automate them. This simplifies our daily work and allows us to be more efficient and focused on delivering value to the business.

Typical examples of repeating tasks include scaling compute resources to optimize their usage from a cost and performance perspective, sending automated e-mails or Slack messages with results of a SQL query, materializing views or doing periodic copies of data for development purposes, exporting data to S3 buckets, and so on.

How Rockset helps with automation

Rockset offers a set of powerful features to help automate common tasks in building and managing data solutions:

- a rich set of APIs so that every aspect of the platform can be controlled through REST

- Query Lambdas - which are REST API wrappers around your parametrized SQL queries, hosted on Rockset

- scheduling of Query Lambdas - a recently introduced feature where you can create schedules for automatic execution of your query lambdas and post results of those queries to webhooks

- compute-compute separation (along with a shared storage layer) which allows isolation and independent scaling of compute resources

Let’s deep dive into why these are helpful for automation.

Rockset APIs allow you to interact with all of your resources - from creating integrations and collections, to creating virtual instances, resizing, pausing and resuming them, to running query lambdas and plain SQL queries.

Query Lambdas offer a nice and easy to use way to decouple consumers of data from the underlying SQL queries so that you can keep your business logic in one place with full source control, versioning and hosting on Rockset.

Scheduled execution of query lambdas enables you to create cron schedules that can automatically execute query lambdas and optionally post the results of those queries to webhooks. These webhooks can be hosted externally to Rockset (to further automate your workflow, for example to write data back to a source system or send an e-mail), but you can also call Rockset APIs and perform tasks like virtual instance resizing or even creating or resuming a virtual instance.

Compute-compute separation allows you to have dedicated, isolated compute resources (virtual instances) per use case. This means you can independently scale and size your ingestion VI and one or more secondary VIs that are used for querying data. Rockset is the first real-time analytics database to offer this feature.

With the combination of these features, you can automate everything you need (except maybe brewing your coffee)!

Typical use cases for automation

Let’s now take a look into typical use cases for automation and show how you would implement them in Rockset.

Use case 1: Sending automated alerts

Often times, there are requirements to deliver automated alerts throughout the day with results of SQL queries. These can be either business related (like common KPIs that the business is interested in) or more technical (like finding out how many queries ran slower than 3 seconds).

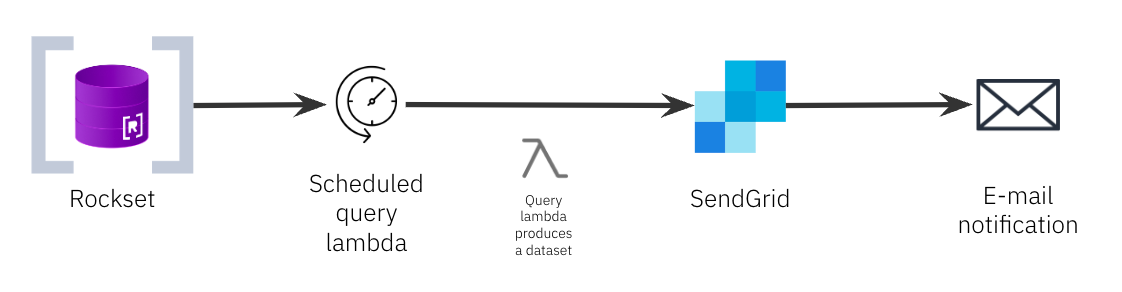

Using scheduled query lambdas, we can run a SQL query against Rockset and post the results of that query to an external endpoint such as an e-mail provider or Slack.

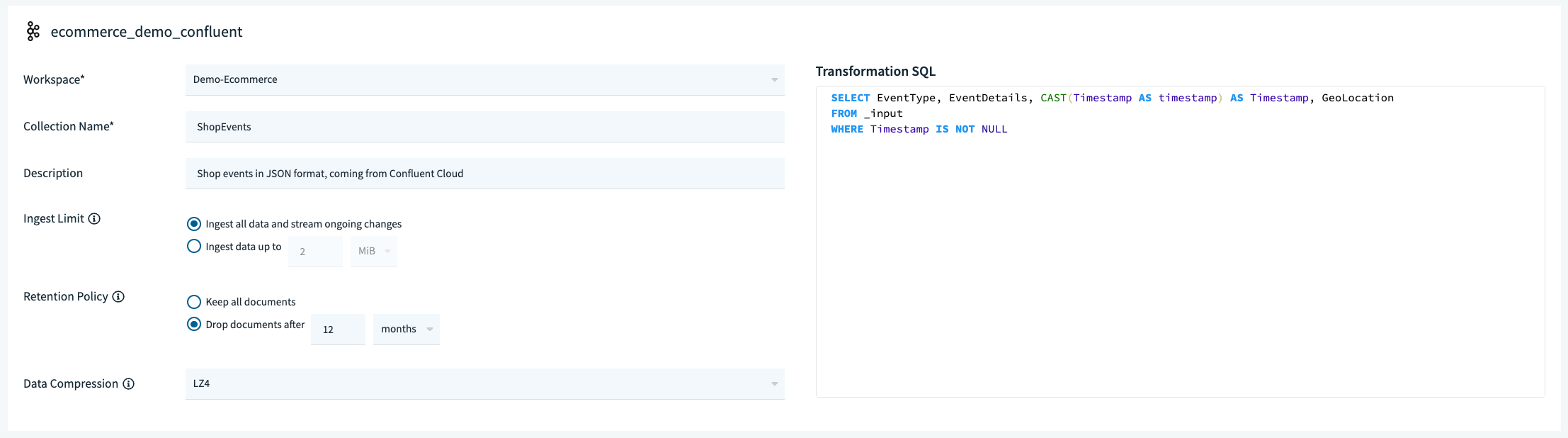

Let’s look at an e-commerce example. We have a collection called ShopEvents with raw real-time events from a webshop. Here we track every click to every product in our webshop, and then ingest this data into Rockset via Confluent Cloud. We are interested in knowing how many items were sold on our webshop today and we want to deliver this data via e-mail to our business users every six hours.

We’ll create a query lambda with the following SQL query on our ShopEvents collection:

SELECT

COUNT(*) As ItemsSold

FROM

"Demo-Ecommerce".ShopEvents

WHERE

Timestamp >= CURRENT_DATE() AND EventType = 'Checkout';

We’ll then use SendGrid to send an e-mail with the results of that query. We won’t go through the steps of setting up SendGrid, you can follow that in their documentation.

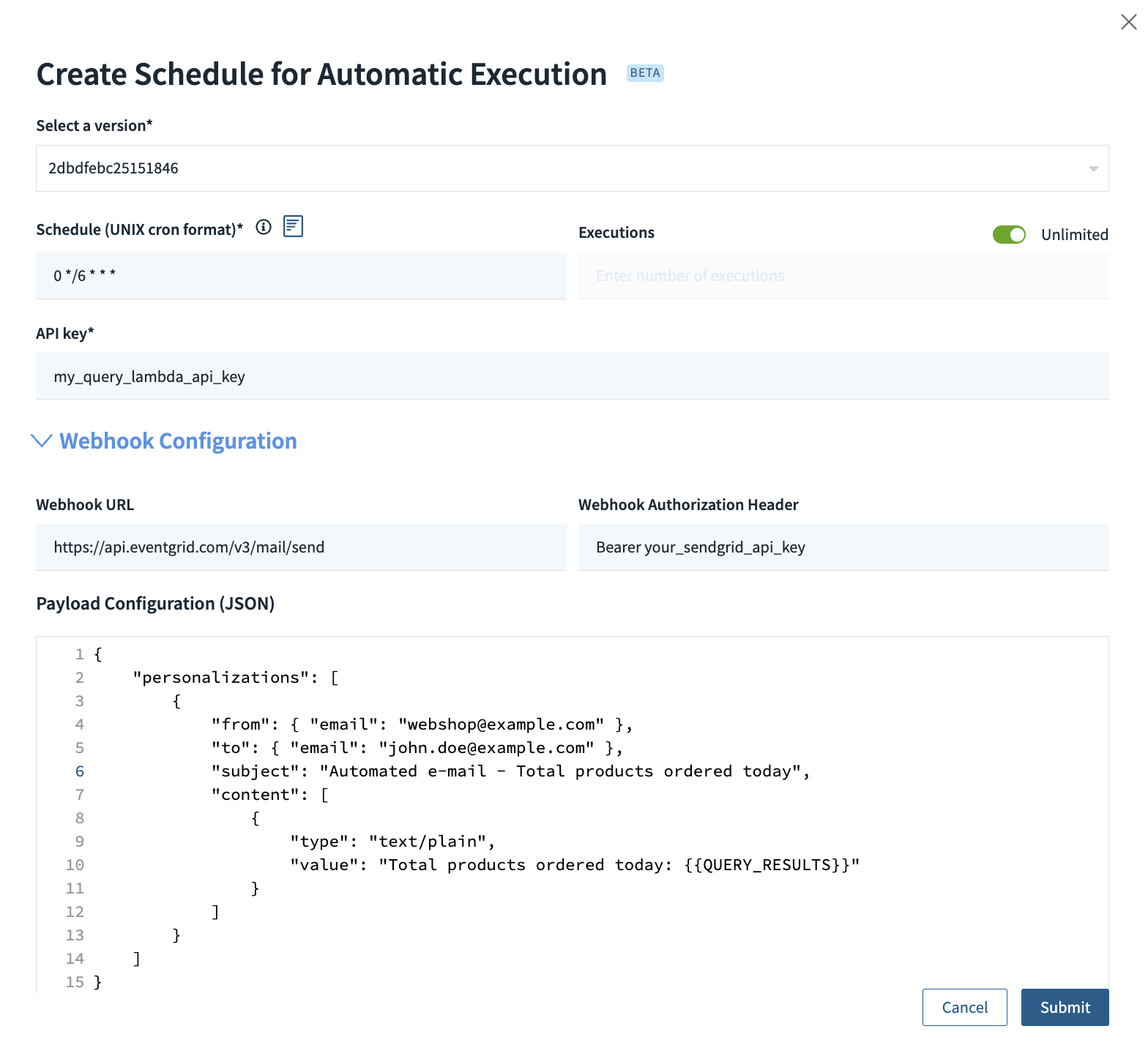

Once you’ve got an API key from SendGrid, you can create a schedule for your query lambda like this, with a cron schedule of 0 */6 * * * for every 6 hours:

This will call the SendGrid REST API every 6 hours and will trigger sending an e-mail with the total number of sold items that day.

{{QUERY_ID}} and {{QUERY_RESULTS}} are template values that Rockset provides automatically for scheduled query lambdas so that you can use the ID of the query and the resulting dataset in your webhook calls. In this case, we’re only interested in the query results.

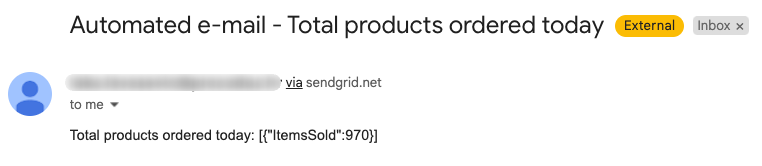

After enabling this schedule, this is what you’ll get in your inbox:

You could do the same with Slack API or any other provider that accepts POST requests and Authorization headers and you’ve got your automated alerts set up!

If you’re interested in sending alerts for slow queries, look at setting up Query Logs where you can see a list of historical queries and their performance.

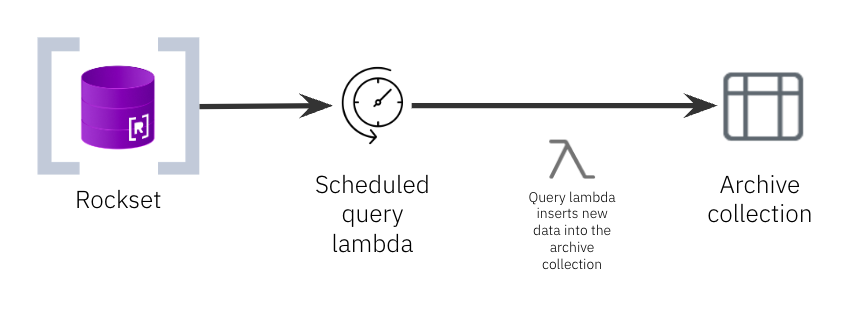

Use case 2: Creating materialized views or development datasets

Rockset supports automatic real-time rollups on ingestion for some data sources. However, if you have a need to create additional materialized views with more complex logic or if you need to have a copy of your data for other purposes (like archival, development of new features, etc.), you can do it periodically by using an INSERT INTO scheduled query lambda. INSERT INTO is a nice way to insert the results of a SQL query into an existing collection (it could be the same collection or a completely different one).

Let’s again look at our e-commerce example. We have a data retention policy set on our ShopEvents collection so that events that are older than 12 months automatically get removed from Rockset.

However, for sales analytics purposes, we want to keep a copy of specific events, where the event was a product order. For this, we’ll create a new collection called OrdersAnalytics without any data retention policy. We’ll then periodically insert data into this collection from the raw events collection before the data gets purged.

We can do this by creating a SQL query that will get all Checkout events for the previous day:

INSERT INTO "Demo-Ecommerce".OrdersAnalytics

SELECT

e.EventId AS _id,

e.Timestamp,

e.EventType,

e.EventDetails,

e.GeoLocation,

FROM

"Demo-Ecommerce".ShopEvents e

WHERE

e.Timestamp BETWEEN CURRENT_DATE() - DAYS(1) AND CURRENT_DATE()

AND e.EventType = 'Checkout';

Note the _id field we are using in this query - this will ensure that we don’t get any duplicates in our orders collection. Check out how Rockset automatically handles upserts here.

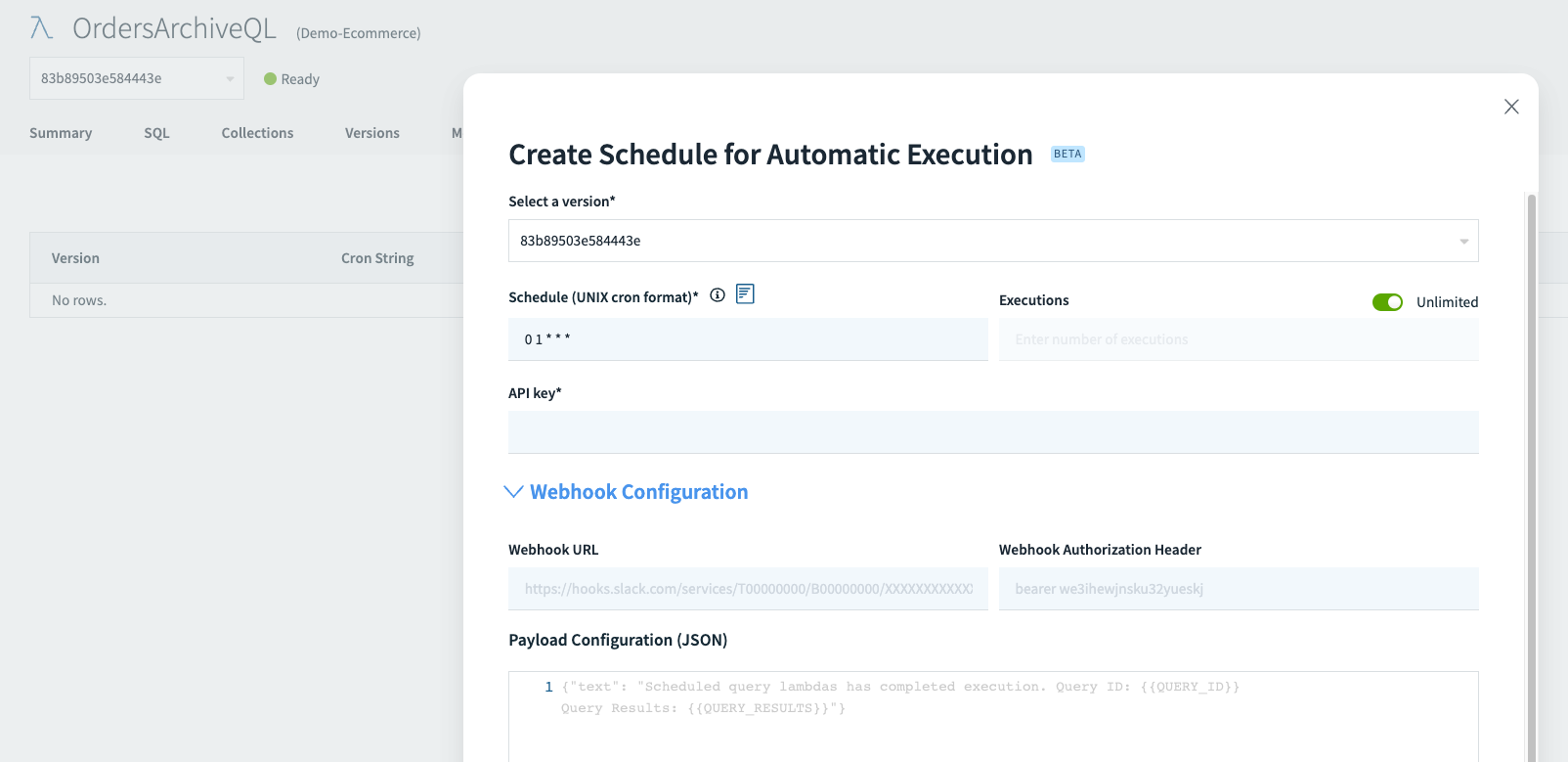

Then we create a query lambda with this SQL query syntax, and create a schedule to run this once a day at 1 AM, with a cron schedule 0 1 * * *. We don’t need to do anything with a webhook, so this part of the schedule definition is empty.

That’s it - now we’ll have daily product orders saved in our OrdersAnalytics collection, ready for use.

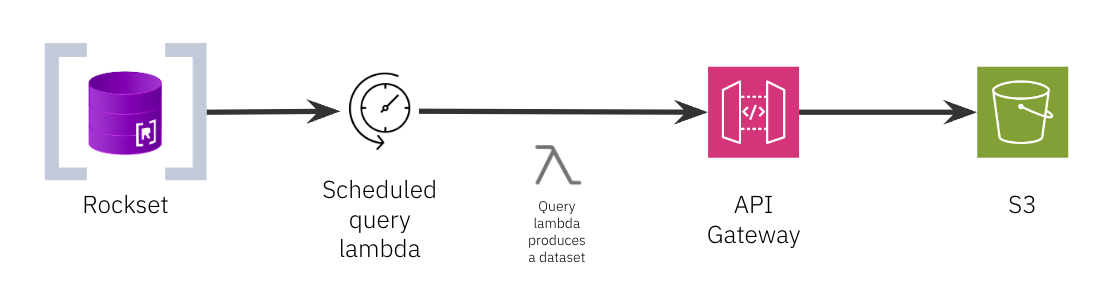

Use case 3: Periodic exporting of data to S3

You can use scheduled query lambdas to periodically execute a SQL query and export the results of that query to a destination of your choice, such as an S3 bucket. This is useful for scenarios where you need to export data on a regular basis, such as backing up data, creating reports or feeding data into downstream systems.

In this example, we will again work on our e-commerce dataset and we’ll leverage AWS API Gateway to create a webhook that our query lambda can call to export the results of a query into an S3 bucket.

Similar to our previous example, we’ll write a SQL query to get all events from the previous day, join that with product metadata and we’ll save this query as a query lambda. This is the dataset we want to periodically export to S3.

SELECT

e.Timestamp,

e.EventType,

e.EventDetails,

e.GeoLocation,

p.ProductName,

p.ProductCategory,

p.ProductDescription,

p.Price

FROM

"Demo-Ecommerce".ShopEvents e

INNER JOIN "Demo-Ecommerce".Products p ON e.EventDetails.ProductID = p._id

WHERE

e.Timestamp BETWEEN CURRENT_DATE() - DAYS(1) AND CURRENT_DATE();

Next, we’ll need to create an S3 bucket and set up AWS API Gateway with an IAM Role and Policy so that the API gateway can write data to S3. In this blog, we’ll focus on the API gateway part - be sure to check the AWS documentation on how to create an S3 bucket and the IAM role and policy.

Follow these steps to set up AWS API Gateway so it’s ready to communicate with our scheduled query lambda:

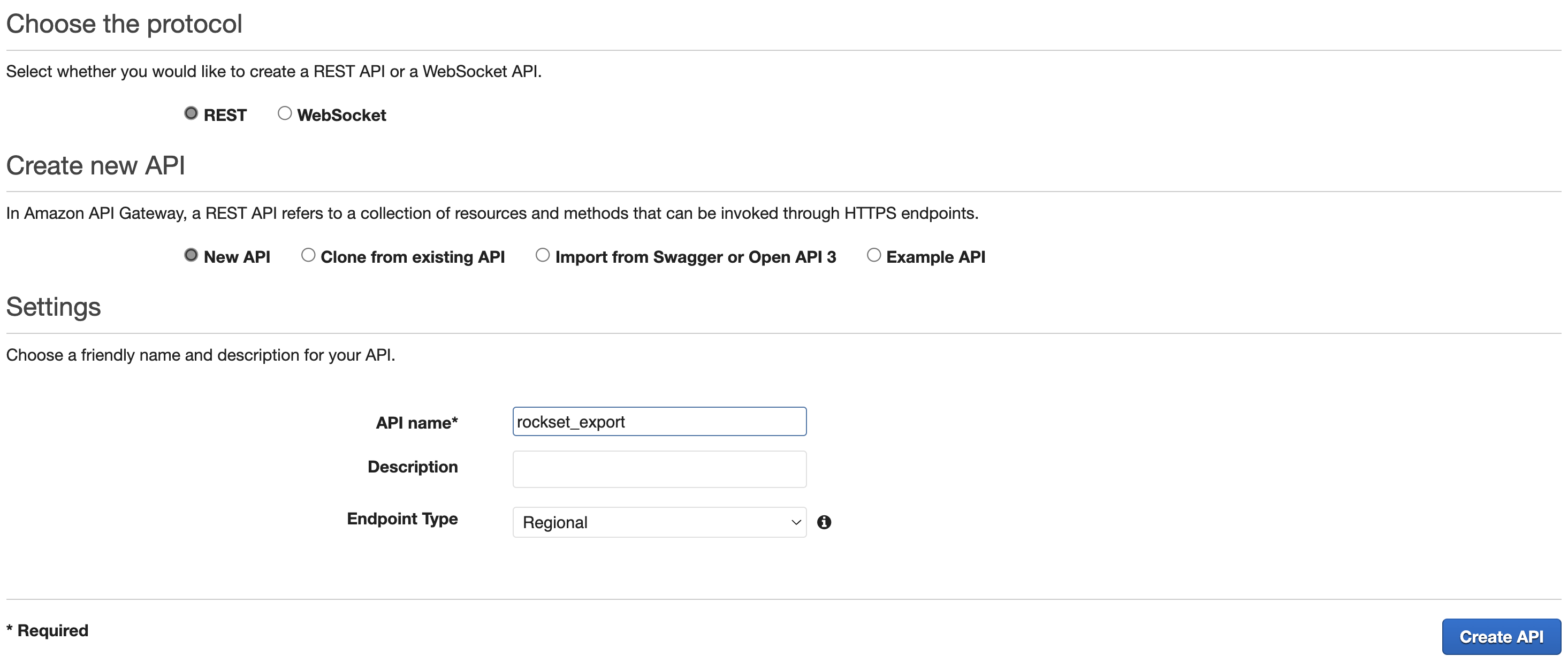

- Create a REST API application in the AWS API Gateway service, we can call it

rockset_export:

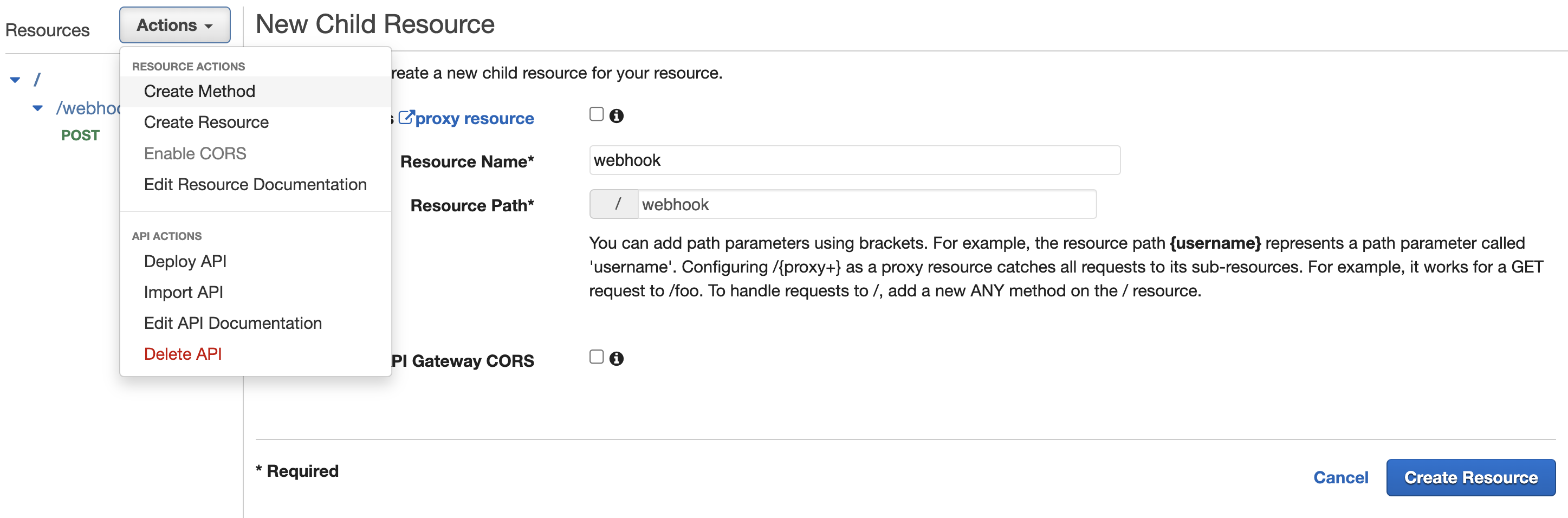

- Create a new resource which our query lambdas will use, we’ll call it

webhook:

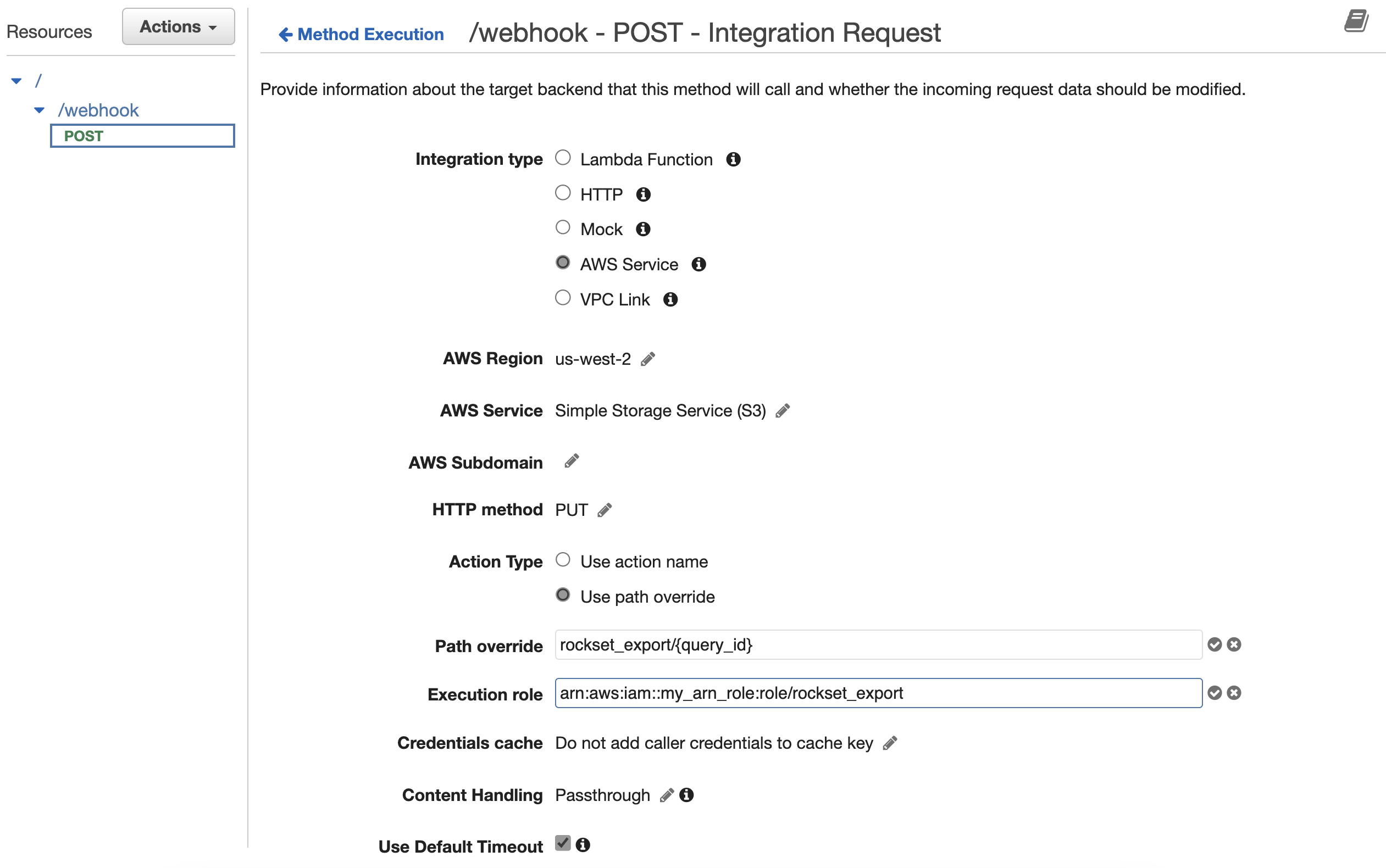

- Create a new POST method using the settings below - this essentially enables our endpoint to communicate with an S3 bucket called

rockset_export:

- AWS Region:

Region for your S3 bucket - AWS Service:

Simple Storage Service (S3) - HTTP method:

PUT - Action Type:

Use path override - Path override (optional):

rockset_export/{query _id}(replace with your bucket name) - Execution role:

arn:awsiam::###:role/rockset_export(replace with your ARN role) - Setup URL Path Parameters and Mapping Templates for the Integration Request - this will extract a parameter called

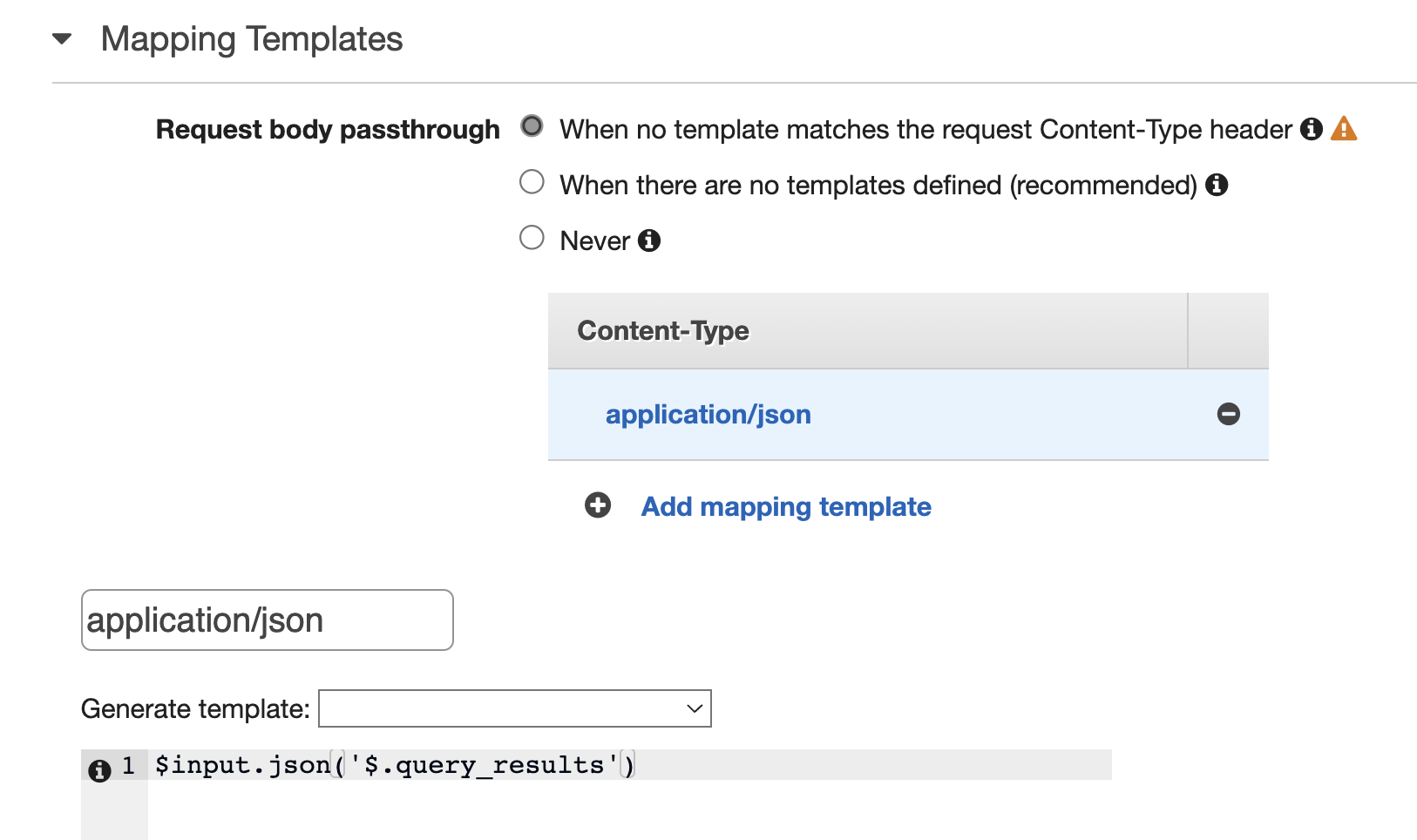

query_idfrom the body of the incoming request (we’ll use this as a name for files saved to S3) andquery_resultswhich we’ll use for the contents of the file (this is the result of our query lambda):

Once that’s done, we can deploy our API Gateway to a Stage and we’re now ready to call this endpoint from our scheduled query lambda.

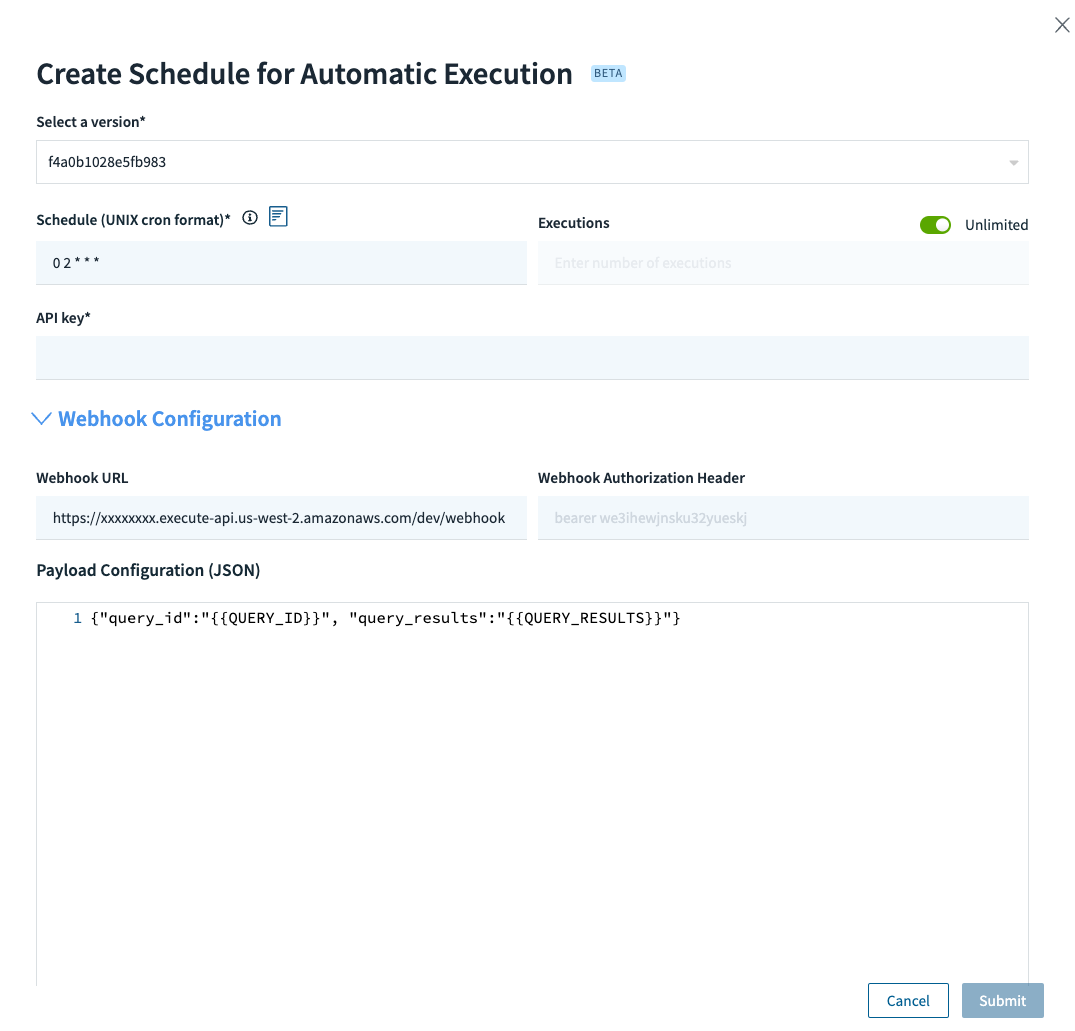

Let’s now configure the schedule for our query lambda. We can use a cron schedule 0 2 * * * so that our query lambda runs at 2 AM in the morning and produces the dataset we need to export. We’ll call the webhook we created in the previous steps, and we’ll supply query_id and query_results as parameters in the body of the POST request:

We’re using {{QUERY_ID}} and {{QUERY_RESULTS}} in the payload configuration and passing them to the API Gateway which will use them when exporting to S3 as the name of the file (the ID of the query) and its contents (the result of the query), as described in step 4 above.

Once we save this schedule, we have an automated task that runs every morning at 2 AM, grabs a snapshot of our data and sends it to an API Gateway webhook which exports this to an S3 bucket.

Use case 4: Scheduled resizing of virtual instances

Rockset has support for auto-scaling virtual instances, but if your workload has predictable or well understood usage patterns, you can benefit from scaling your compute resources up or down based on a set schedule.

That way, you can optimize both spend (so that you don’t over-provision resources) and performance (so that you are ready with more compute power when your users want to use the system).

An example could be a B2B use case where your customers work primarily in business hours, let’s say 9 AM to 5 PM throughout the work days, and so you need more compute resources during those times.

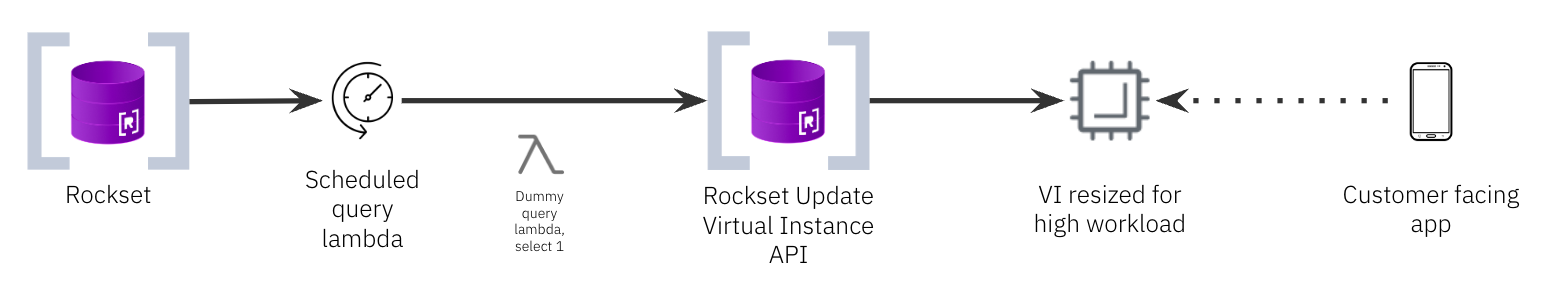

To handle this use case, you can create a scheduled query lambda that will call Rockset’s virtual instance endpoint and scale it up and down based on a cron schedule.

Follow these steps:

- Create a query lambda with just a

select 1query, since we don’t actually need any specific data for this to work. - Create a schedule for this query lambda. In our case, we want to execute once a day at 9 AM so our cron schedule will be

0 9 * * *and we will set unlimited number of executions so that it runs every day indefinitely. - We’ll call the update virtual instance webhook for the specific VI that we want to scale up. We need to supply the virtual instance ID in the webhook URL, the authentication header with the API key (it needs permissions to edit the VI) and the parameter with the

NEW_SIZEset to something likeMEDIUMorLARGEin the body of the request.

We can repeat steps 1-3 to create a new schedule for scaling the VI down, changing the cron schedule to something like 5 PM and using a smaller size for the NEW_SIZE parameter.

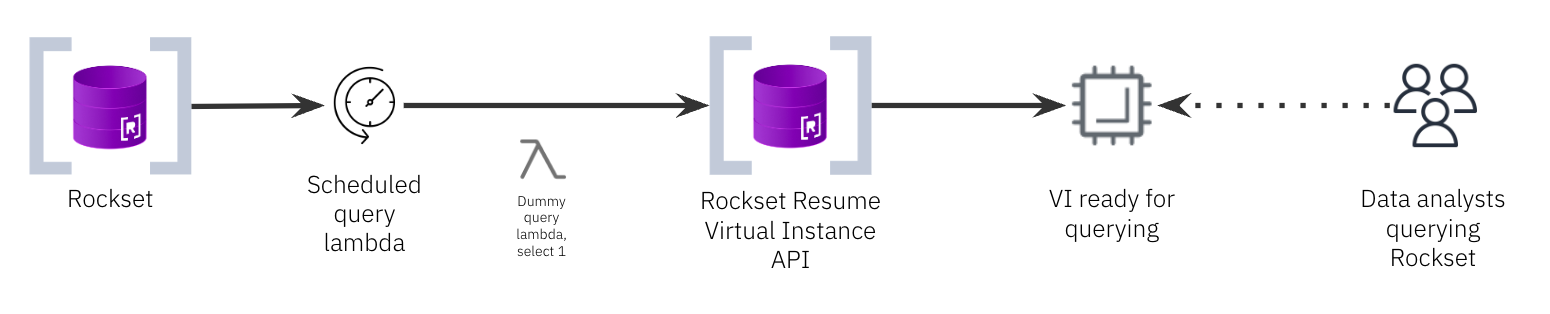

Use case 5: Setting up data analyst environments

With Rockset’s compute-compute separation, it’s easy to spin up dedicated, isolated and scalable environments for your ad hoc data analysis. Each use case can have its own virtual instance, ensuring that a production workload remains stable and performant, with the best price-performance for that workload.

In this scenario, let’s assume we have data analysts or data scientists who want to run ad hoc SQL queries to explore data and work on various data models as part of a new feature the business wants to roll out. They need access to collections and they need compute resources but we don’t want them to create or scale these resources on their own.

To cater to this requirement, we can create a new virtual instance dedicated to data analysts, ensure that they can’t edit or create VIs by creating a custom RBAC role and assign analysts to that role, and we can then create a scheduled query lambda that will resume the virtual instance every morning so that data analysts have an environment ready when they log into the Rockset console. We could even couple this with use case 2 and create a daily snapshot of production into a separate collection and have the analysts work on that dataset from their virtual instance.

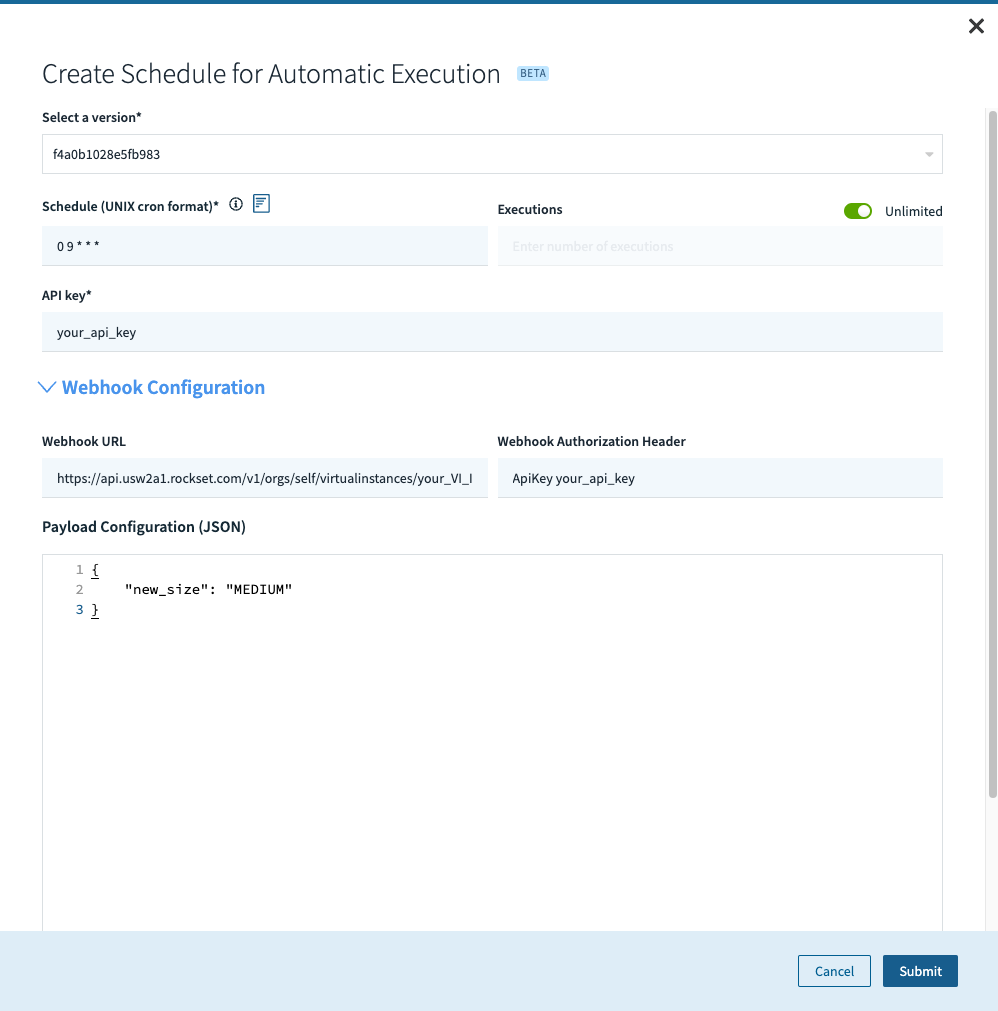

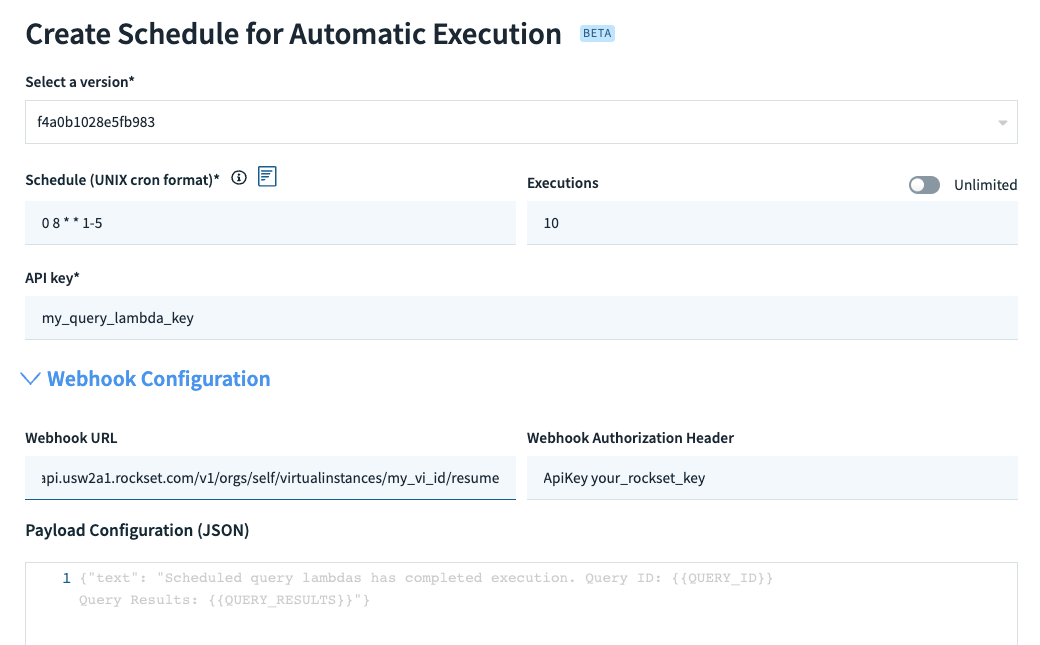

The steps for this use case are similar to the one where we scale the VIs up and down:

- Create a query lambda with just a

select 1query, since we don’t actually need any specific data for this to work. - Create a schedule for this query lambda, let’s say daily at 8 AM Monday to Friday and we will limit it to 10 executions because we want this to only work in the next 2 working weeks. Our cron schedule will be

0 8 * * 1-5. - We will call the resume VI endpoint. We need to supply the virtual instance ID in the webhook URL, the authentication header with the API key (it needs permissions to resume the VI). We don’t need any parameters in the body of the request.

That’s it! Now we have a working environment for our data analysts and data scientists that’s up and running for them every work day at 8 AM. We can edit the VI to either auto-suspend after certain number of hours or we can have another scheduled execution which will suspend the VIs at a set schedule.

Conclusion

As demonstrated above, Rockset offers a set of useful features to automate common tasks in building and maintaining data solutions. The rich set of APIs combined with the power of query lambdas and scheduling allow you to implement and automate workflows that are completely hosted and running in Rockset so that you don’t have to rely on 3rd party components or set up infrastructure to automate repeating tasks.

We hope this blog gave you a few ideas on how to do automation in Rockset. Give this a try and let us know how it works!